Study No. I for AI Ensemble (2021)

I have been exploring how deep learning techniques can be applied to the creation and performance of new music since around 2017-2018, and I gave a presentation on the topic at Ircam in March 2018. Since then the field has moved very quickly, and I kept updating my methods to keep up with the latest results, which led me to keep postponing making actual music with what I had learned. With this piece I have finally started to apply that knowledge.

The system is based on Google Magenta's DDSP (Differentiable Digital Signal Processing). The core idea behind DDSP is to model an instrument's sound with a synthesizer rather than generating raw audio samples directly. Most Western instruments produce harmonic overtones, and even those whose partials deviate from exact integer ratios do so only slightly. It therefore makes sense to model an instrument with three components: a harmonic additive synthesizer, a band-limited noise generator for bow noise, breath sounds, etc., and a reverb to model the resonance of the instrument body. The neural network itself is a recurrent one that learns to control the parameters of these components, given the pitch and loudness of a monophonic recording of the target instrument.
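To make the three components concrete, here is a minimal NumPy sketch of a harmonic-plus-noise synthesizer followed by a reverb. It is not Magenta's `ddsp` library or its API: the function names, the 16 kHz sample rate, the frame size, the evenly spaced noise bands, and the decaying-noise impulse response are all assumptions for illustration. In DDSP a trained network produces the control signals (amplitude, harmonic distribution, noise band gains) from pitch and loudness; here those controls are simply hand-written arrays.

```python
import numpy as np

SR = 16000  # sample rate in Hz (assumed for this sketch)


def harmonic_synth(f0, amplitude, harmonic_dist, sr=SR):
    """Sum of sinusoids at integer multiples of a time-varying f0.

    f0:            (n,) fundamental frequency per sample, in Hz
    amplitude:     (n,) overall amplitude per sample
    harmonic_dist: (n, k) relative weight of each of k harmonics per sample
    """
    n, k = harmonic_dist.shape
    freqs = f0[:, None] * np.arange(1, k + 1)[None, :]  # (n, k) harmonic frequencies
    # Silence harmonics at or above Nyquist so they do not alias.
    dist = np.where(freqs < sr / 2, harmonic_dist, 0.0)
    # Normalize so the weights at each sample sum to 1.
    dist = dist / np.maximum(dist.sum(axis=1, keepdims=True), 1e-8)
    # Integrate instantaneous frequency to obtain each harmonic's phase.
    phases = 2 * np.pi * np.cumsum(freqs / sr, axis=0)
    return amplitude * np.sum(dist * np.sin(phases), axis=1)


def filtered_noise(band_gains, frame=256, seed=0):
    """White noise shaped frame by frame by gains on evenly spaced bands.

    band_gains: (frames, b) gain of each band, from 0 Hz up to Nyquist, per frame
    """
    rng = np.random.default_rng(seed)
    bin_pos = np.linspace(0.0, 1.0, frame // 2 + 1)
    band_pos = np.linspace(0.0, 1.0, band_gains.shape[1])
    out = []
    for gains in band_gains:
        spectrum = np.fft.rfft(rng.uniform(-1.0, 1.0, frame))
        curve = np.interp(bin_pos, band_pos, gains)  # band gains -> per-bin gains
        out.append(np.fft.irfft(spectrum * curve, n=frame))
    return np.concatenate(out)


def simple_reverb(audio, sr=SR, decay_s=0.5, seed=1):
    """Convolve with an exponentially decaying noise burst, a stand-in impulse response."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(decay_s * sr)) / sr
    ir = rng.normal(size=t.size) * np.exp(-6.9 * t / decay_s)  # about -60 dB at decay_s
    ir[0] = 1.0  # keep the dry signal at time zero
    wet = np.convolve(audio, ir)[: audio.size]
    return wet / np.maximum(np.max(np.abs(wet)), 1e-8)  # peak-normalize


# Usage: one second of a 220 Hz tone with a crescendo, 20 harmonics rolling off as 1/k,
# plus a little noise whose energy falls toward high frequencies.
n = SR
tone = harmonic_synth(np.full(n, 220.0), np.linspace(0.0, 0.5, n),
                      np.tile(1.0 / np.arange(1, 21), (n, 1)))
noise = 0.02 * filtered_noise(np.tile(np.linspace(1.0, 0.0, 8), (n // 256 + 1, 1)))[:n]
mix = simple_reverb(tone + noise)
```

Because every stage is ordinary array arithmetic, the same structure can be written in a differentiable framework, which is what allows DDSP to train the controlling network end to end against recorded audio.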

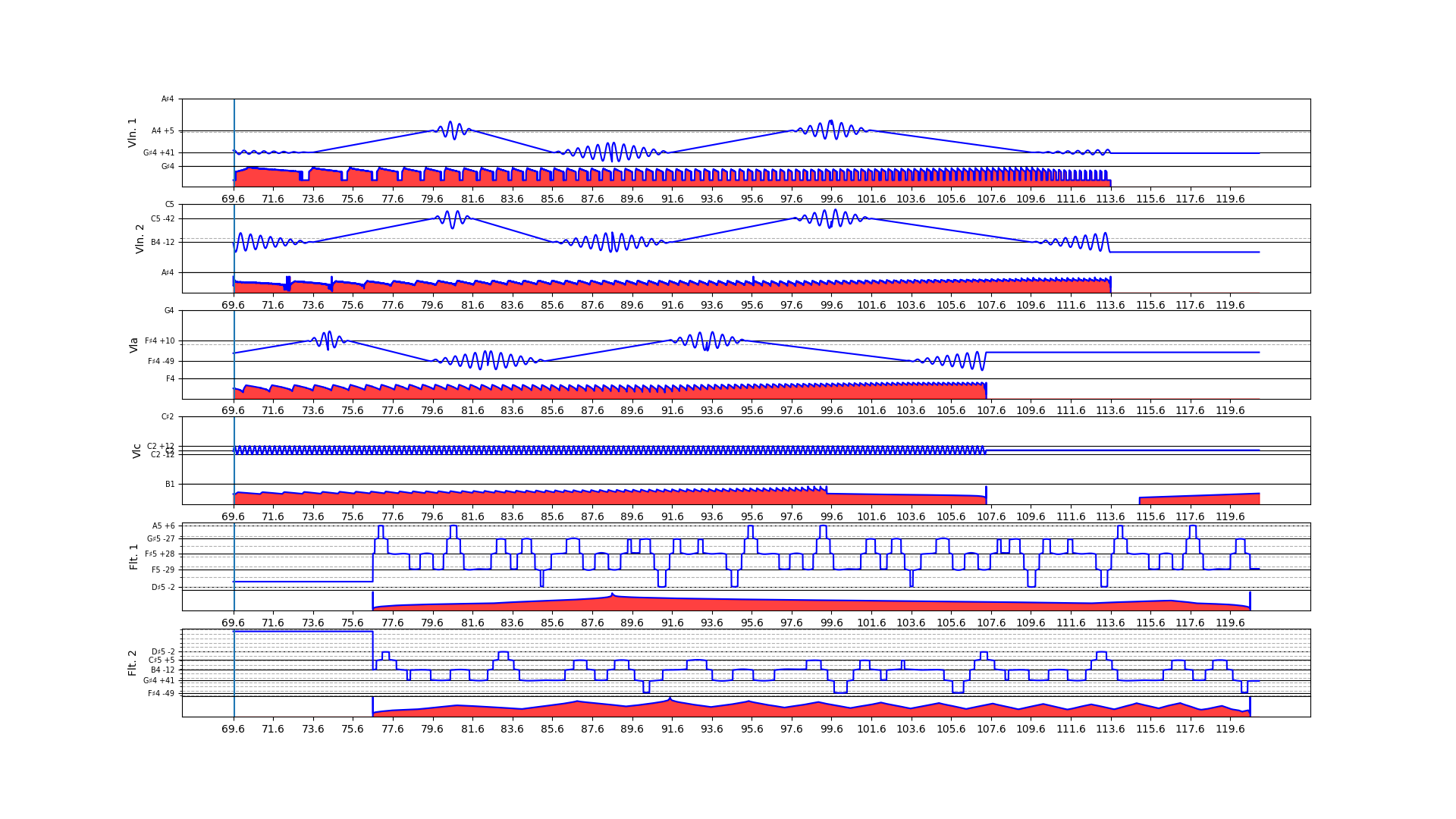

The Magenta team have released pre-trained networks for violin, flute, and a couple of other instruments. I trained my own networks on viola and cello recordings and composed a 4-minute piece for two flutes, two violins, viola, and cello, using graphic notation. The code used to generate the piece and the accompanying video score are freely available on my GitHub page, although the code is still a bit of a mess. The piece has two sections: the first is based on increasingly deep fractal elaborations of a simple initial motif, and the second on stretched harmonic overtones. I hope you enjoy it.
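The piece's own code is not reproduced here, so the following is only a guess at what the two compositional ideas could look like, not the method actually used. The hypothetical `elaborate` function replaces every note of a motif with a copy of the whole motif transposed to that note and compressed to its duration, repeating this to a given depth. The hypothetical `stretched_partials` function assumes one common way to stretch an overtone series, $f_n = f_0 \, n^{s}$, where $s = 1$ gives the ordinary harmonic series and $s > 1$ widens the spacing between partials; the exponent form and the default value of `s` are assumptions.

```python
def elaborate(motif, depth):
    """Recursively replace each note with the motif transposed to that note.

    motif: list of (semitone_offset, duration) pairs, relative to the first note.
    Each level multiplies the note count by len(motif) and keeps the total duration.
    """
    if depth == 0:
        return list(motif)
    total = sum(d for _, d in motif)
    out = []
    for pitch, dur in elaborate(motif, depth - 1):
        for p, d in motif:
            # Transpose by the parent pitch; scale so the copy fills the parent's duration.
            out.append((pitch + p, dur * d / total))
    return out


def stretched_partials(f0, k, s=1.02):
    """Frequencies of the first k partials, f_n = f0 * n**s (s = 1 is purely harmonic)."""
    return [f0 * n ** s for n in range(1, k + 1)]


# Usage: a three-note motif elaborated twice yields 3**3 = 27 notes with the same total length.
motif = [(0, 1), (4, 1), (7, 2)]
deep = elaborate(motif, 2)
partials = stretched_partials(220.0, 8)
```

Keeping the total duration fixed at every level means deeper elaborations only add detail within the original time span, which fits the idea of "increasingly deep" elaborations of a single motif.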